The Time Eye: Nuts and Bolts of a Biological Future Detector

Wednesday, 21 September, 2016

Rubbing my temples and squinting, I foresee that no less a science writer than James Gleick will very shortly be publishing a book called Time Travel. Unlike his most famous book, Chaos, which was incredibly forward looking—introducing a whole generation to a really cool new concept, “the butterfly effect” (i.e., the way a butterfly flapping its wings in Hong Kong influences the weather in New York a week later)—Time Travel is … er, will be … oddly backward looking, a retrospective view of H.G. Wells’ sci-fi trope and its impact on our culture. It will only barely, glancingly, remark on the emerging science that is destined to make time travel, or at least time-traveling information, a reality.

I foresee that despite brief obligatory musings on John Wheeler and wormholes, Gleick will use the word “retrocausation” only once in the book: “Retrocausation is now a topic,” he will say, and leave it at that. Mostly, he will repeat again and again that time travel is obviously impossible, that it’s only a meme, a literary device, a metaphor for our increasingly sped-up and time-obsessed society. Causes precede effects, and that’s that.

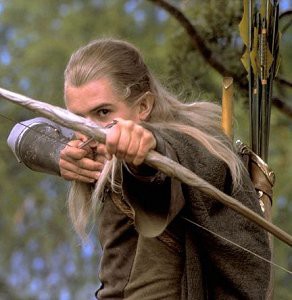


I’m a big admirer of Gleick, but this will be a pretty unfortunate oversight on his part. Retrocausation is indeed a topic, one that is about to sweep over our culture like a tidal wave. How did he miss it?

The idea has been there from the beginning in modern physics’ upper echelons: Effects can theoretically precede their causes. But only in the past decade or so has the technology finally existed to test the idea. An increasing number of truly mind-blowing experiments are now confirming what Yakir Aharonov, for example, intuited as early as the 1960s: that every single causal event in our world, every single interaction between two particles, is not only propagating an influence “forward” in the intuitive billiard-ball fashion but also carrying channels of influence backward from the future to the present and from the present to the past.

Until now, physicists have misinterpreted this whole half of causation as “randomness,” or quantum indeterminacy. But Einstein never liked randomness as an inherent property of nature, and Aharonov wasn’t satisfied either. Newly developed experiments, which “weakly measure” particles at one point in time and then conventionally measure a subset of them at a second, later point in time (“post-selection”), are showing that Aharonov was right and that Einstein’s intuitions were on the mark: God really doesn’t play dice; he’s truly a master archer instead, a divine Legolas who can swiftly turn and shoot causal arrows in both directions. Every interaction a particle has with its environment or with an experimental measurement apparatus perturbs seemingly “random” aspects of its behavior, such as its spin, at earlier points in its history. In 2009, a team at the University of Rochester used a measurement of part of a laser beam at a later time point B (post-selection) to produce a deviation in those same photons at an earlier time point A, where they had been measured only weakly, gently enough not to disturb them much: retrocausation, in other words.
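The weak-measurement-plus-post-selection procedure has a standard quantitative form, the weak value A_w = ⟨f|A|i⟩ / ⟨f|i⟩, where |i⟩ is the prepared (pre-selected) state, |f⟩ the post-selected state, and A the weakly measured observable. The sketch below evaluates it for the spin (Pauli-Z) of a spin-1/2 particle; the function names and angles are illustrative choices, not a model of the Rochester apparatus. The arithmetic is the same whichever interpretation of it one favors.

```python
import math


def weak_value_sigma_z(alpha: float, beta: float) -> float:
    """Weak value of Pauli-Z for a spin-1/2 particle.

    Pre-selected state:  |i> = cos(alpha)|up> + sin(alpha)|down>
    Post-selected state: |f> = cos(beta)|up>  + sin(beta)|down>
    Weak value: A_w = <f|Z|i> / <f|i>  (real here, because both states are real).
    """
    i_up, i_down = math.cos(alpha), math.sin(alpha)
    f_up, f_down = math.cos(beta), math.sin(beta)
    numerator = f_up * i_up - f_down * i_down     # <f|Z|i>, with Z = diag(+1, -1)
    denominator = f_up * i_up + f_down * i_down   # overlap <f|i>
    if abs(denominator) < 1e-12:
        raise ValueError("pre- and post-selected states are orthogonal")
    return numerator / denominator


def expectation_sigma_z(alpha: float) -> float:
    """Ordinary average of Z in |i> (no post-selection): cos^2 - sin^2."""
    return math.cos(alpha) ** 2 - math.sin(alpha) ** 2


if __name__ == "__main__":
    alpha = math.pi / 4  # ordinary average <Z> is 0 for this state
    print(f"<Z> without post-selection: {expectation_sigma_z(alpha):+.3f}")
    for beta in (0.0, -math.pi / 4 + 0.1):
        print(f"beta = {beta:+.3f} -> weak value {weak_value_sigma_z(alpha, beta):+.3f}")
```

With the pre-selected state at α = π/4 (ordinary average spin of zero), a post-selected state nearly orthogonal to it gives a weak value of about 9.97, far outside the ±1 range of the observable's eigenvalues; this kind of amplification is what weak-value experiments exploit.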

Other hints of causality’s two-faced-ness have been staring physicists in their one-way faces for a long time. Take for example the curious phenomenon known as “frustrated spontaneous emission.” It sounds like an embarrassing sexual complaint that psychotherapy might help with; actually it is a funny thing that happens to light-emitting atoms when they are put in surroundings that cannot absorb light. Ordinarily, atoms decay at a predictably random rate; but when there is nothing to receive their emitted photons, they get, well, frustrated, and withhold their photons. How do they “know” there is nowhere for their photons to go? According to physicist Ken Wharton, the answer is, again, retrocausation: The “random” decay of an atom is really determined retrocausally by the receiver of the photon it will emit. No receiver, then no decay. As in the Rochester experiment, some information is being passed, via that emitted photon (whenever there eventually is one), backward in time.

Or consider entanglement, every anomalist’s favorite quantum quirk. When particles are created together or interact in some way, their characteristics become correlated such that they cannot be described independently of each other; they become part of a single entangled state. If you then send these two particles (photons, say) far away from each other, even to the ends of the universe, their measurement results remain correlated. If you measure photon A and find that it has a certain spin or polarization, the other one is guaranteed to have an identical or corresponding value, as if they somehow communicated with each other long-distance to get their stories straight. This phenomenon was originally pointed out by Einstein and two of his colleagues, Boris Podolsky and Nathan Rosen (collectively known as “EPR”), as a way to show that quantum physics must be incomplete.

Entanglement seemed paradoxical, and thus impossible, because somehow it would involve information traveling between those particles faster than light (i.e., instantaneously), thus violating the theory of Relativity. Yet in 1964, a CERN physicist named John Bell published a theorem showing that no theory based on local hidden variables could reproduce all the predictions of quantum mechanics for such states; subsequent experiments sided with quantum mechanics, supporting the existence of such states.
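Bell's result is most often tested in the later CHSH form (Clauser, Horne, Shimony and Holt, 1969): with two measurement settings per side, any model in which each particle carries predetermined local answers keeps the combination S within |S| ≤ 2, while quantum mechanics predicts up to 2√2 for a singlet pair, whose correlation at analyzer angles a and b is E = −cos(a − b). A minimal sketch that checks both numbers; the function names are hypothetical, and the angles are the standard textbook choice that maximizes the quantum value.

```python
import itertools
import math


def singlet_correlation(a: float, b: float) -> float:
    """Quantum prediction for a spin singlet measured along analyzer
    angles a and b (radians): E(a, b) = -cos(a - b)."""
    return -math.cos(a - b)


def chsh(E, a: float, a2: float, b: float, b2: float) -> float:
    """CHSH combination S = E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return E(a, b) - E(a, b2) + E(a2, b) + E(a2, b2)


def best_local_deterministic_s() -> float:
    """Largest |S| when each particle carries predetermined +/-1 answers
    for both of its settings (a local hidden-variable strategy).

    Random mixtures of these strategies cannot do better, because S is
    linear in the probabilities of the strategies being mixed.
    """
    best = 0.0
    for A, A2, B, B2 in itertools.product((-1, 1), repeat=4):
        s = A * B - A * B2 + A2 * B + A2 * B2
        best = max(best, float(abs(s)))
    return best


if __name__ == "__main__":
    s_quantum = chsh(singlet_correlation, 0.0, math.pi / 2,
                     math.pi / 4, 3 * math.pi / 4)
    print(f"quantum |S| = {abs(s_quantum):.4f}")  # 2*sqrt(2), about 2.8284
    print(f"local bound = {best_local_deterministic_s():.1f}")
```

The gap between the two printed values is what Bell-type experiments measure; on its own, the calculation does not say how the correlations arise, which is the question Huw Price's retrocausal proposal addresses.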

As told by David Kaiser in his fascinating book How the Hippies Saved Physics, Bell’s theorem lies at the heart of today’s revolutions in quantum computing, telecommunications, and cryptography. However, no one has ever been able to explain how entanglement works; it is just another of those many quantum bizarreries that we are supposed to take on faith. But Huw Price, a Cambridge University philosopher who specializes in the physics of time, thinks the answer is to be found, once again, in retrocausality: The measurement that affects one of the two entangled particles sends information back in time to the point when they became entangled in the first place; thus that future event in the life of one of the particles became part of the destiny of the other particle, in a kind of zig-zagging causal path.

Every time a photon bumps into another photon, that bump doesn’t just alter or nudge the properties of those particles in a forward direction; it is a transformation and an exchange of information in both directions. To shift to the quantum computing idiom of someone like Seth Lloyd, it “flips bits,” correlating the measurable properties of those photons and sending information also into the past of both photons, to influence how each behaved the last time it interacted with another particle, and how those other particles behaved in their previous interactions … and so on, back to the beginning of the universe.

It is really important to think about all this correctly. It does not mean that, tucked away safely in a few laboratories, there are funny, exceptional situations where trivial eensy weensy effects precede trivial eensy weensy causes. No, the entire “way things go” is a compromise or handshake agreement between the ordinary, easy-to-understand “one thing after another” behavior of things that Newton said was all there is, and another, opposite vector of influence interweaving with and deflecting and giving shape to events, a vector of influence that Newton’s brilliantly persuasive Laws effectively hid from our view for three whole centuries. It took the first generation of quantum physicists to detect that Newton’s Laws were flawed, that there was something else going on; it took nearly a century more of research and theorizing to begin to confirm that maybe this thing they had been calling “indeterminacy” all along was hiding the missing retrocausal link in our understanding of cause and effect.

A Future Detector

Now, if it is possible to detect the future in apparatuses in laboratories, you can bet that life, too, has found a way to build a future detector on the same principles, and that it is possibly even basic to life’s functioning. Post-selection, as Paul Davies has suggested, is possibly the basis for the origin of life; I argued in a previous post that it is responsible for the basic skewing or “queering” function of life, the way it “tunnels” toward order and complexity more often than the bare chance of randomly jostling chemicals would predict. A biological future detector using weak measurement and post-selection would have been the first sense, the basis of all later organismic guidance systems.


Consider: A single-celled paramecium can hunt and learn from its experience without anything we would recognize as sense organs or a nervous system. Humble slime molds too can learn and solve mazes—their behavior is nonrandom, yet they lack a “brain” or any complex sense organs to give them information. You can bet that in both these cases there is some more basic intelligence at work than mere trial and error, and that it is precisely the cellular pre-sense I have proposed. Indeed, as if to confirm my speculations, a study reported by Fernando Alvarez in the Journal of Scientific Exploration shows that our friends the planarian worms are actually precognitive of noxious stimuli up to a minute in advance. If this is the case, they are truly little prophets.

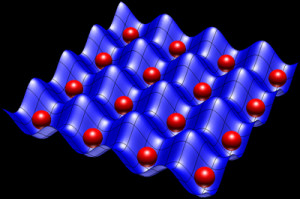

The microtubules that were long thought to be just structural features in cells have for a few decades been suspected by forward-thinking biologists to be somehow the basis for a cellular nervous system, and although the quantum computing processes outlined by Stuart Hameroff in his publications with Roger Penrose are above my pay grade, I’ll wager the cellular pre-sense I’m proposing is subserved by these structures. Microtubules are perfect tubes of molecular proteins, each shaped like a pocket able to hold an electron at distances that would enable entanglement. [url=http://quantumconsciousness.org/sites/default/files/Craddock et al Feasibility of Coherent Energy Transfer in Microtubules 2014 JR Soc Interface.pdf]Recent research[/url] has confirmed that these constantly expanding and contracting tubes, which form star-like, possibly brain-like “centrosomes” at the heart of single-celled organisms, do transport energy (which is just information) within cells according to quantum principles. It is surely no accident that they are especially abundant and complexly arrayed in neurons.

Basically, a quantum computer is a lattice or matrix of quantum-coherent atoms or particles. The most famous property of quantum computers is their greatly enhanced capacity for complex calculation by using the magic of superposition; the computer can simultaneously take multiple paths to the right answer to a problem. But increasingly it is becoming clear that quantum coherence is the same thing as entanglement; and one property of entanglement is that, insofar as entangled particles are sequestered from outside interference, time is irrelevant to the system: A measurement of one particle or atom affects its entangled partners instantaneously. Theoretically, using post-selection and weak measurement, you can even send information into a quantum computer’s past. In a previous post I described one method for doing this devised by Seth Lloyd, using quantum teleportation—essentially a version of “quantum tunneling” in the temporal rather than spatial dimension. But there may be even simpler ways of achieving this, along the lines of the Rochester experiment.

Conceptually, such a future detector is not unlike a simple eye, but in the time dimension instead of space.

Imaging the Future

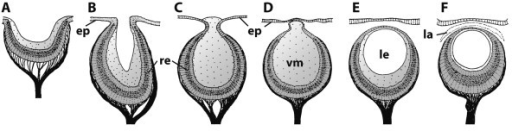

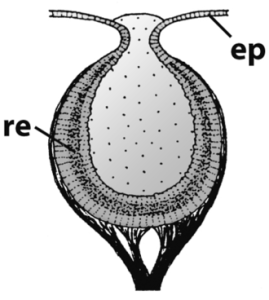

Consider the most basic type of eye in the animal kingdom: an enclosed pit with a photoreceptive retina at the bottom. In the absence of such a pit, there is very little a photoreceptor array can determine about the environment: It can tell you the presence of light and its intensity or frequency, but it cannot “image” the environment. This is a bit analogous to how the back-flowing influence of future interactions is interpreted by us as randomness or chance—generally we can’t say anything more specific about it, so it appears as a kind of noise that, at most, can be quantified (i.e., probability).


But when you do what evolution gradually did (many, many independent times), which is set photoreceptors inside a recess that is mostly enclosed except for a small pupil-like opening overhead, akin to the aperture in a camera, you actually gain much more information from the in-falling light even though you have eliminated most of that light in doing so. Even in the absence of a magnifying lens (which came later and improves the photon-gathering capacity), a narrow aperture acts as a pinhole camera to project an inverted image onto the photoreceptive cells. All of a sudden, you have the ability to capture a picture, a re-presentation, of what is outside in the environment, such as a predator or prey.
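The trade described here, less light in exchange for a picture, follows from similar triangles. A minimal sketch, assuming an idealized pinhole with no lens and no diffraction; the `PinholeEye` class and its units are hypothetical. A point at height h and distance d in front of the aperture lands at −depth·h/d on the retina (the minus sign is the inversion), while a point source smears into a blur spot of diameter aperture·(d + depth)/d.

```python
from dataclasses import dataclass


@dataclass
class PinholeEye:
    """Idealized pit eye: a pupil-like aperture above a flat retina."""
    depth: float     # distance from aperture to retina (arbitrary units)
    aperture: float  # diameter of the opening (same units)

    def project(self, height: float, distance: float) -> float:
        """Retinal position of a point `height` above the axis and `distance`
        in front of the aperture; negative means the image is inverted."""
        return -self.depth * height / distance

    def blur(self, distance: float) -> float:
        """Geometric blur-spot diameter for a point source (diffraction ignored):
        the cone of rays through the aperture widens by (distance + depth) / distance."""
        return self.aperture * (distance + self.depth) / distance

    def relative_light(self) -> float:
        """Light admitted scales with aperture area (constant factor dropped)."""
        return self.aperture ** 2


if __name__ == "__main__":
    for eye in (PinholeEye(depth=1.0, aperture=0.1), PinholeEye(depth=1.0, aperture=2.0)):
        image = abs(eye.project(height=2.0, distance=10.0))
        print(f"aperture {eye.aperture}: image {image:.2f}, blur {eye.blur(10.0):.2f}, "
              f"light {eye.relative_light():.2f}")
```

With a pit 1 unit deep and a 0.1-unit pupil, an object 2 units tall at 10 units' distance projects to a 0.2-unit image with a 0.11-unit blur, so its shape survives; open the pupil to 2 units and the blur (2.2 units) swamps the image, even though four hundred times more light gets in.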

The pupil, the aperture, is a “selector” of light rays—by selecting only a small bundle of rays, it generates much more coherent information about energetic events unfolding in space, facilitating what Alfred Korzybski called space- and energy-binding (i.e., finding food). The basic pre-sense subserved by intracellular quantum computing would be, in contrast, a temporal sense, a time eye, which amounts to an ability to skew behavioral options in the direction of a “right answer”—a reward that lies ahead of the organism in time. It is a “right answer detector”—in other words, a post-selector, and thus a time-binder. To understand how it works, just turn the simple optical eye sideways, along the x-axis of time instead of the y-axis of space. Instead of a rain of light being constrained by a narrow aperture to form a coherent image on the surface below it, the rain of future causal influence in a sensitive quantum computer needs to be constrained at time point B to form a coherent “image” at the earlier time point A. Suddenly that random noise of future influence becomes coherent and carries information that is meaningful for the organism.

In other words, to create a time eye, evolution needed to do exactly what the experimenters at Rochester did: create a system that weakly measures some particles and then measures a subset of them a second time. This requires the system to have a “measuring presence” at two points in time, not just one, the same way a primitive eye requires bodily tissue at two different distances from the external “seen” object (the retina and the pupil). That is no problem at all for an organism that is continuous in time just as it has extension in space.

Most minimally, the constraint, the “aperture” in this system, would be another measurement at a later time point, which is only possible if the organism has survived that long. Survival at time point B causes a detectable perturbation or deviation of a particle’s behavior at a prior time point A inside a microtubule quantum computer. A single such temporal “circuit” would not provide much useful information; but an array of such circuits representing multiple options in a decision-space, operating in tandem, would be a meaningful guidance system, orienting the organism toward that positive outcome: for instance, moving to the left versus moving to the right. Suppose a move to the left sends an “I survived” message back a few microseconds in time by causing a detectable perturbation or deviation in an electron’s spin, whereas a move to the right causes no such deviation, and suppose the organism is wired to automatically favor the option with the deviation. Then this system of multiple precognitive circuits, or “future eyes,” linked together to guide behavior will tend to produce “the correct answer” more often than chance.
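Stripped of the physics, the claim in this paragraph is a signal-detection argument: if each option carries even a faint, noisy mark of how it will turn out, and the organism reliably favors the marked option, its choices beat chance, and more circuits in tandem sharpen the effect. The toy simulation below is a sketch under stated assumptions: the Gaussian noise, the `bias` parameter, and the helper names are hypothetical stand-ins for whatever signal the time eye would receive, and the code says nothing about where such a signal could come from.

```python
import random


def choose_option(true_option: int, n_options: int, n_circuits: int,
                  bias: float, rng: random.Random) -> int:
    """Pick the option whose circuits report the largest average deviation.

    Hypothetical model: each circuit reading is Gaussian noise with sd 1;
    circuits attached to the option that will turn out well get an extra
    mean shift of `bias`.
    """
    scores = []
    for option in range(n_options):
        signal = bias if option == true_option else 0.0
        readings = [rng.gauss(signal, 1.0) for _ in range(n_circuits)]
        scores.append(sum(readings) / n_circuits)
    # Favor the option with the strongest averaged deviation.
    return max(range(n_options), key=lambda k: scores[k])


def hit_rate(n_trials: int, n_options: int, n_circuits: int,
             bias: float, seed: int = 0) -> float:
    """Fraction of trials in which the favored option is the one that turns out well."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_trials):
        true_option = rng.randrange(n_options)
        if choose_option(true_option, n_options, n_circuits, bias, rng) == true_option:
            hits += 1
    return hits / n_trials


if __name__ == "__main__":
    # Two options (left vs. right); chance level is 0.5.
    for circuits in (1, 5, 25):
        rate = hit_rate(n_trials=5000, n_options=2, n_circuits=circuits, bias=0.2)
        print(f"{circuits:>2} circuits per option: hit rate {rate:.3f}")
```

With `bias=0.2` the hit rate should sit above 0.5 and climb as circuits per option go from 1 to 25; with `bias=0.0` it falls back to chance, since nothing then distinguishes the options.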

My guess is this is probably (at the crudest level) how microtubules are working as a cellular guidance system. All later “precognitive” systems, such as in the planarian brain or the human brain, are built on this basic platform. It is the basis of all intuition, for instance, and as I’ve suggested, the real nature of the unconscious: The brain, via 86 billion classically linked neurons, each controlled by myriad little quantum computers, is a mega-quantum computer that computes literally four-dimensionally, across its timespan, detecting (faintly) the future at multiple temporal distances out from the present, via post-selection. The “future” that each neuron is detecting is its own future behavior, nothing more—or, perhaps, those microtubules are conditioning the signaling at specific synapses, such that it is really individual synaptic connections that either tend to “survive” (by being potentiated/reinforced) or not. In either case, when assembled into a complex array of interlinked neurons, whole “representations” of future emotions and cognitive states can potentially be projected into the past—or at the very least, the behavior of neural circuits can be mildly conditioned or perturbed by those circuits’ future behavior in response to external stimuli, just as they are strongly conditioned or perturbed by past behavior in the form of the long-term potentiation that subserves memory.

In a complex brain where countless circuits compete for influence in various opponent systems, “reward” has replaced “survival” as the most relevant signal. Right neuronal/synaptic answers are rewarded. This basic orientation toward reward, coupled with its largely unconscious functioning, is why time paradoxes are not an issue for this future detector: There is no tendency to act to foreclose an unconsciously “foreseen” or fore-sensed outcome. Also, as in the experimental systems using weak measurement, the future signal remains noisy, more a “majority report” than anything coherent and unmistakable. There is lots of room for error, and thus no grandfathers were harmed in the making of this precognitive biological guidance system.

The Planarian Effect

Although this theory has its hand-wavy elements—we still don’t know exactly how quantum computing works in microtubules—it is a lot less hand-wavy than “syntropy” and “morphic resonance” as accounts of how complex systems orient toward orderly future outcomes. We need not imagine anything intrinsic in the fabric of space, time, and causality that gives rise to “attractors” in the future. Order and complexity are mediated by precognitive cellular intelligence, and at higher levels, by brains. The already unimaginably complex classical interconnection of neurons that we can crudely study with today’s imaging tools is just one level of information processing, but it is built over a more fundamental level of quantum computing that we still have not even begun to map.


And if I am right, we will no longer need to appeal to even hand-wavier notions of “transcendent mind” or “extended consciousness” to explain our basic pre-sense. As I’ve said again and again on this blog, consciousness (whatever it is or isn’t) is a big MacGuffin, the fake rabbit all the dogs are chasing around the racetrack. But we should applaud the chase, and even place bets, because the search for quantum consciousness will, as an unintended byproduct, fill in the nitty-gritty details of how the basic biological future-detector, the time eye, works … and thus make all the psi-skeptics eat crow.

Peering into my future-scope, I can see that “post-selection,” the causal Darwinism that makes the time eye possible without paradox, is going to be the concept du jour of the next decade, the way Gleick’s “butterfly effect” was the concept du jour of the late 1980s. Maybe they will call it the “planarian effect,” for the way a loud noise at 3:00 PM affects the waggle of a worm at 2:59.*

NOTE:

*The previous most interesting discovery about planarian worms was made by memory researcher James McConnell back in the 1960s: If you subject one planarian worm to conditioned learning, then grind it up and feed it to another unconditioned worm, the cannibal worm acquires the first worm’s memories (see “[url=http://mypage.siu.edu/gmrose/Media/570q/McConnell 1962.pdf]Memory Transfer Through Cannibalism in Planarians[/url]”). Don’t try this at home. Unfortunately, McConnell’s methods and findings were subsequently criticized, but recent studies do show that decapitated planarians can grow new heads with their old memories intact, suggesting that their memories may indeed somehow be chemically rather than neuronally encoded, and not entirely in their wee brains.

Thanks to Eric at: http://thenightshirt.com

Sat Mar 23, 2024 11:33 pm by globalturbo
