The terrible truth about Alexa: It is made to spy on us

By Strange Sounds, May 5, 2019

This week, I read through a history of everything I’ve said to Alexa, and it felt a little bit like reading an old diary. Until I remembered that the things I’ve told Alexa in private are stored on an Amazon server and have possibly been read by an Amazon employee. This is all to make Alexa better, the company keeps saying. However, to many people, it’s not immediately obvious how humans interacting with your seemingly private voice commands is anything other than surveillance. Alexa, these people say, is a spy hiding in a wiretapping device.

Alexa is spying on you! Picture via Amazon

Alexa is spying on you! Picture via Amazon

The debate over whether or not Alexa or any voice assistant is spying on us is years old at this point, and it’s not going away. Privacy advocates have filed a complaint with the Federal Trade Commission (FTC) alleging these devices violate the Federal Wiretap Act. Journalists have investigated the dangers of always-on microphones and artificially intelligent voice assistants. Skeptical tech bloggers like me have argued these things were more powerful than people realized and overloaded with privacy violations. Recent news stories about how Amazon employees review certain Alexa commands suggest the situation is worse than we thought.

It’s starting to feel like Alexa and other voice assistants are destined to spy on us because that’s how the system was designed to work. These systems rely on machine learning and artificial intelligence to improve themselves over time. The new technology underpinning them is also currently prone to error, and even if they were perfect, the data-hungry companies that built them are constantly thinking of new ways to exploit users for profit. And where imperfect technology and powerful companies collide, the government tends to struggle so much with understanding what’s going on, that regulation seems like an impossible solution.

The situation isn’t completely dire. This technology could be really cool, if we pay closer attention to what’s happening. Which, again, is pretty damn complicated.

The extent to which false positives are a problem became glaringly evident the moment I started reading through my history of Alexa commands on Amazon’s website. Most of the entries are dull: “Hey Alexa;” “Show me an omelet recipe;” “What’s up?” But sprinkled amongst the mundane dribble was also a daunting series of messages that said, “Text not available—audio was not intended for Alexa.” Every time I saw it, I saw it twice again and read it aloud in my head: “Audio was not intended for Alexa.” These are the things Alexa heard that it should not have heard, commands that have been sent to Amazon’s servers and sent back because the machine decided the wake word had not been said or that Alexa had recorded audio when the user wasn’t giving a command. In other words, they’re errors.

Alexa was making a lot of error last August. via Gizmodo

Alexa was making a lot of error last August. via Gizmodo

At face value, voice assistants picking up stray audio is an inevitable defect in the technology. The very sophisticated computer program that can understand anything you say is hiding behind a very simple one that’s been trained to hear a wake word and then send whatever commands come after that to the smarter computer. The problem is that the simple computer often doesn’t work right, and people don’t always know that there’s a recording device in the room. That’s how we get Echo-based nightmares like the Oregon couple who inadvertently sent a recording of an entire conversation to an acquaintance. Amazon itself has been working on improvements to lower the error rate with wake words, but it’s hard to imagine that the system will ever be flawless.

“That’s the scary thing: there is a microphone in your house, and you do not have final control over when it gets activated,” Dr. Jeremy Gillula, tech projects director at the Electronic Frontier Foundation (EFF), told me. “From my perspective, that’s problematic from a privacy point of view.”

This sort of thing happening is bad luck, although it’s more common than most people would like. What’s perhaps worse than glitches is the very intentional behind-the-scenes workflow that reveals users’ interactions with voice assistants to strangers. Bloomberg recently reported that a team of Amazon employees has access to Alexa users’ geographic coordinates and that this data was collected to improve the voice assistant’s abilities. This revelation came just a couple of weeks after Bloomberg also reported that thousands of people employed by Amazon around the world analyze users’ Alexa commands to train the software. They can overhear compromising situations, and in some cases, the Amazon employees make fun of what people say.

Amazon pushed back hard against these reports. A company spokesperson told me that Amazon only annotates “an extremely small number of interactions from a random set of customers in order to improve the customer experience.” These recordings are kept in a protected system that uses multi-factor authentication so that “a limited number” of carefully monitored employees can gain access. Bloomberg suggests that the team numbers in the thousands.

But for Alexa and other artificially intelligent voice assistants to work, some human review is necessary. This training could prevent future errors and lead to better features. Amazon isn’t the only company using humans to review voice commands, either. Google and Apple also employ teams of people to review what users say to their voice assistants to train the software to understand people better and to develop new features. Sure, the human element of these apparently computer-based services is creepy, but it’s also an essential part of how these technologies are developed.

“In the end, for really hard cases, you need a human to tell you what was going on,” Dr. Alex Rudnicky, a computer scientist at Carnegie Mellon University, said in an interview. Rudnicky’s been developing speech recognition software since the 1980s and has led teams competing in the Alexa Prize, an Amazon-sponsored contest for conversational artificial intelligence. While he maintains that humans are necessary for improving natural language processing, Rudnicky also believes that it’s incredibly unlikely for a voice command to get traced back to one individual.

“Once you’re one out of 10 million,” Rudnicky said, “it’s kind of hard to argue that someone’s going to find it and trace it back to you and find out stuff about you that you don’t want them to know.”

This idea doesn’t make the idea of a stranger reading your daily thoughts or knowing your location history feel any less creepy, however. It might be uncommon for a voice assistant to record me accidentally, but the systems don’t yet seem smart enough to wake up with 100 percent accuracy. The fact that Amazon catalogs and makes available all the Alexa recordings it captures—accidental or otherwise—makes me feel terrible.

So I find myself circling back to a few questions. Who’s looking after the users? Why can’t I opt in to letting Amazon record my commands instead of wading through privacy settings looking for ways to stop sending my data to Amazon? And why are my options for opting out limited?

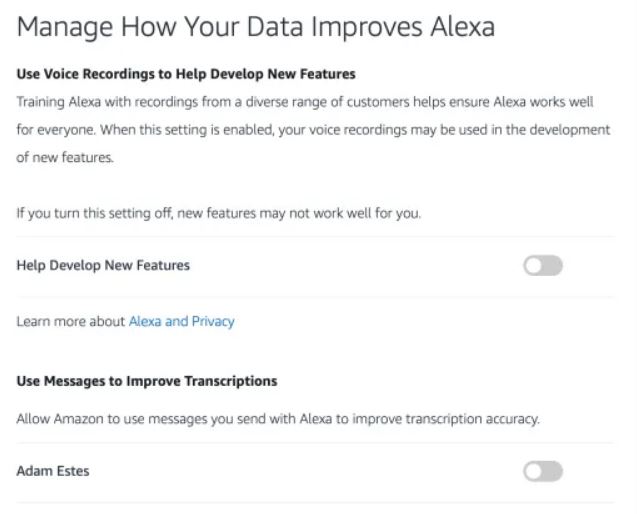

In the Alexa privacy settings, you can opt out of letting Amazon use your recordings to develop new features and improve transcriptions. You cannot opt out of allowing Amazon to retain your recordings for other purposes.

The Alexa privacy problem nobody wants to solve. via Gizmodo

The Alexa privacy problem nobody wants to solve. via Gizmodo

Settings like these put the onus on the user to protect their own privacy. If that has to be the case, why can’t these companies make my interactions with voice assistants completely anonymous?

Apple seems to be trying to do this. Whenever you talk to Siri, those commands are encrypted before they’re sent to the company with a random Siri identifier attached. Your Siri identifier is not associated with your Apple ID, so there’s no way you can open up your privacy settings on an iPhone and see what you’ve been saying to Siri. Not all Siri functionality requires your device to send information to Apple’s servers, either, so that cuts down on exposure. Apple does use recordings of Siri commands to train the software because you have to train artificially intelligent software to make it better. The fact that Apple’s not associating particular commands with a particular user might explain why so many people think Siri is terrible. Then again, Siri might be your best bet for some semblance of privacy in a voice assistant.

This is the point in the debate when Tim Cook would like to remind you that Apple is not a data company. Companies like Google and Amazon turn your personal data into products that they can sell to advertisers or use to sell you more stuff, he’d say. This is the same argument we saw from the Apple CEO when he wrote a Time Magazine column earlier this year and announced plans to push for federal privacy legislation.

The idea is starting to get some traction. In January, the Government Accountability Office released a report calling for Congress to pass comprehensive internet privacy legislation. This report joined a chorus of privacy advocates who have long argued that the United States needs its own version of GDPR. In March, the Senate Judiciary Committee heard testimony from several people who pushed for federal privacy legislation. It’s far from clear whether or not Congress will act on this idea, however.

“Speech technology has gotten so good, it is important to be worried about privacy,” said Dr. Mari Ostendorf, an electrical engineering professor and speech technology expert at the University of Washington. “And I think that probably companies are more worried about it than the U.S. government is.”

One would hope that Amazon is at least rethinking its approach to privacy and voice assistants. Because right now, it seems like the general public is only just unraveling the myriad ways that devices like the Echo are recording our lives without our permission or sharing our personal data with strangers. The most recent controversy over Alexa merely scratches the surface of how a world full of always-on microphones is an utter privacy nightmare.

The problem is that companies like these with data-driven business models have every incentive to collect as much information about their users as possible. Every time you use Alexa, for example, Amazon gets a sharper view of your interests and behavior. When I asked for specifics on how Amazon uses this data, the company gave me a weird example.

“If a customer uses Alexa to make a purchase or interact with other Amazon services, such as Amazon Music,” an Amazon spokesperson said, “we may use the fact the customer took that action in the same way we would if the customer took that action through our website or one of our apps—for instance, to provide product recommendations.”

There’s evidence these kinds of recommendations could become more sophisticated in the future. Amazon has patented technology that can interpret your emotions based on the tone and volume of your voice. According to the patent, this hypothetical version of an Alexa-like technology could tell if you’re happy or sad and deliver “highly targeted audio content, such as audio advertisements or promotions.” One could argue that the only thing holding Amazon back from releasing an ad-supported Alexa is the potential of blowback from the Echo-owning public. The government’s probably not going to stop it.

https://youtu.be/mED-ckf6kmQ

“At the end of the day all of these speakers connect back to a single entity,” Sadeh explained. “So Amazon could use voice recognition to identify you, and as a result, it could potentially build extremely extensive profiles about who you are, what you do, what your habits are, all sorts of other attributes that you would not necessarily want to disclose to them.”

He suggests Amazon could make a business out of this, knowing who you are and what you like by the mere sound of your voice. And unlike the most dystopian notions of what facial recognition could enable, voice recognition could work without ever seeing you. It could work over phone lines. In a future where internet-connected microphones are present in an ever-increasing number of rooms, a system like this could always be listening. Several of the researchers I talked to brought up this dystopian idea and lamented its imminent arrival.

Such a system is so far hypothetical, but if you think about it, all of the pieces are in place. There are tens of millions of devices full of always-on microphones all over the country, in homes as well as public places. They’re allowed to listen to and record what we say at certain times. These artificially intelligent machines are also prone to errors and will only get better by listening to us more, sometimes letting humans correct their behavior. Without any government oversight, who knows how the system will evolve from here.

We wanted a brighter future than this, didn’t we? Talking to your computer seemed like a really cool thing in the 90s, and it was definitely a significant part of the Jetsons’ lifestyle. But so far, it seems like an unavoidable truth that Alexa and other voice assistants are bound to spy on us, whether we like it or not. In a way, the technology is designed in such a way that it can’t be avoided, and in the future, without oversight, it will probably get worse.

https://youtu.be/QqU7EA3I3T0

Maybe it’s foolish to think that Amazon and the other companies building voice assistants actually are worried about privacy. Maybe they’re working on fixing the problems caused by error-prone tech, and maybe they’re working on addressing the anxiety people feel when they see that devices like the Echo are recording them, sometimes without the users’ realizing it. Heck, maybe Congress is working on laws that would hold these companies accountable.

Inevitably, the future of voice-powered computers doesn’t have to be so dystopian. Talking to our gadgets would change the way we interact with technology in the most profound ways, if everyone were on board with how it was being done. Right now, that doesn’t seem to be the case. And, ironically, the fewer people we have helping to develop tech like Alexa, the worse Alexa will be.

Amazon’s Alexa and all other similar devices are spying on us. Hope you are going to stop using them soon!

http://strangesounds.org/2019/05/alexa-spy-device-amazon-video.html

Thanks to: http://strangesounds.org

By

Strange Sounds

-

May 5, 2019

This week, I read through a history of everything I’ve said to Alexa, and it felt a little bit like reading an old diary, until I remembered that the things I’ve told Alexa in private are stored on an Amazon server and may have been read by an Amazon employee. This is all to make Alexa better, the company keeps saying. To many people, however, it’s not obvious how strangers reviewing your seemingly private voice commands is anything other than surveillance. Alexa, these people say, is a spy hiding in a wiretapping device.

Alexa is spying on you! Picture via Amazon

The debate over whether Alexa, or any voice assistant, is spying on us is years old at this point, and it’s not going away. Privacy advocates have filed a complaint with the Federal Trade Commission (FTC) alleging these devices violate the Federal Wiretap Act. Journalists have investigated the dangers of always-on microphones and artificially intelligent voice assistants. Skeptical tech bloggers like me have argued that these devices are more powerful than people realize and riddled with privacy violations. Recent news stories about how Amazon employees review certain Alexa commands suggest the situation is worse than we thought.

It’s starting to feel like Alexa and other voice assistants are destined to spy on us because that’s how the system was designed to work. These systems rely on machine learning and artificial intelligence to improve themselves over time. The technology underpinning them is also still prone to error, and even if it were perfect, the data-hungry companies that built them are constantly thinking of new ways to exploit users for profit. And where imperfect technology and powerful companies collide, the government struggles so much to understand what’s going on that regulation seems like an impossible solution.

The situation isn’t completely dire. This technology could be really cool, if we pay closer attention to what’s happening. Which, again, is pretty damn complicated.

Never-ending Errors

One fundamental problem with Alexa and other voice assistants is that the technology is prone to failure. Devices like the Echo come equipped with always-on microphones that are only supposed to record when you want them to listen. While some devices require the push of a physical button to summon Alexa, many are designed to start recording only after you’ve said the wake word. Anyone who has spent time using Alexa knows that it doesn’t always work like this. Sometimes the software hears random noise, mistakes it for the wake word, and starts recording.

The extent to which false positives are a problem became glaringly evident the moment I started reading through my history of Alexa commands on Amazon’s website. Most of the entries are dull: “Hey Alexa;” “Show me an omelet recipe;” “What’s up?” But sprinkled amongst the mundane drivel was also a daunting series of messages that said, “Text not available—audio was not intended for Alexa.” Every time I saw it, I read it twice more and repeated it in my head: “Audio was not intended for Alexa.” These are the things Alexa heard that it should not have heard: recordings that were sent to Amazon’s servers and then rejected because the system decided that the wake word had not been said, or that the user wasn’t giving a command. In other words, they’re errors.

Alexa was making a lot of errors last August. via Gizmodo

At face value, voice assistants picking up stray audio is an inevitable defect in the technology. The sophisticated computer program that can understand what you say is hiding behind a much simpler one that has been trained to hear a wake word and then send whatever comes after it to the smarter computer. The problem is that the simple computer often doesn’t work right, and people don’t always know that there’s a recording device in the room. That’s how we get Echo-based nightmares like the one in which an Oregon couple’s Echo inadvertently sent a recording of an entire conversation to an acquaintance. Amazon itself has been working to lower the wake-word error rate, but it’s hard to imagine that the system will ever be flawless.
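To make that two-stage design a little more concrete, here is a minimal Python sketch. It assumes a hypothetical on-device detector that gives each short audio frame a wake-word score between 0 and 1; the function name `gate_audio`, the 0.8 threshold, the three-frame capture window and all the scores are made up for illustration and are not how Amazon’s Echo firmware actually works. What it shows is that the simple gate only ever sees a number: if background noise happens to score above the threshold, whatever is said next gets forwarded to the cloud.

```python
from typing import List, Tuple


def gate_audio(frames: List[Tuple[str, float]],
               threshold: float = 0.8,
               capture_frames: int = 3) -> List[str]:
    """Hypothetical wake-word gate: return the frames that would be
    forwarded to the (simulated) cloud recognizer.

    Each frame is (audio_label, wake_score), where wake_score is an
    assumed confidence from a small on-device detector.
    """
    uploaded: List[str] = []
    remaining = 0  # frames still to forward after the last trigger
    for audio, wake_score in frames:
        if remaining > 0:
            # Gate is open: send this frame on to the "smarter computer".
            uploaded.append(audio)
            remaining -= 1
        elif wake_score >= threshold:
            # The local detector thinks it heard the wake word. It cannot
            # tell a real "Alexa" from similar-sounding noise; it only
            # compares a score against the threshold.
            remaining = capture_frames
    return uploaded


# Made-up scores: a line of TV dialogue happens to resemble the wake word.
household_audio = [
    ("background music", 0.10),
    ("TV dialogue", 0.85),   # false positive
    ("private chat 1", 0.05),
    ("private chat 2", 0.02),
    ("private chat 3", 0.01),
    ("private chat 4", 0.03),
]

print(gate_audio(household_audio))
# ['private chat 1', 'private chat 2', 'private chat 3']
```

Raising the threshold to 0.9 would keep this particular conversation off the server, but in any detector of this kind a stricter threshold also means more genuine wake words get missed, which is why tuning alone can’t make the error rate zero.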

“That’s the scary thing: there is a microphone in your house, and you do not have final control over when it gets activated,” Dr. Jeremy Gillula, tech projects director at the Electronic Frontier Foundation (EFF), told me. “From my perspective, that’s problematic from a privacy point of view.”

When this happens, it’s bad luck, though it’s more common than most people would like. What’s perhaps worse than glitches is the very intentional behind-the-scenes workflow that exposes users’ interactions with voice assistants to strangers. Bloomberg recently reported that a team of Amazon employees has access to Alexa users’ geographic coordinates and that this data was collected to improve the voice assistant’s abilities. This revelation came just a couple of weeks after Bloomberg also reported that thousands of people employed by Amazon around the world analyze users’ Alexa commands to train the software. They can overhear compromising situations, and in some cases, the Amazon employees make fun of what people say.

Amazon pushed back hard against these reports. A company spokesperson told me that Amazon annotates only “an extremely small number of interactions from a random set of customers in order to improve the customer experience.” These recordings are kept in a protected system that uses multi-factor authentication so that only “a limited number” of carefully monitored employees can gain access. Bloomberg’s reporting, however, suggests that the team numbers in the thousands.

But for Alexa and other artificially intelligent voice assistants to work, some human review is necessary. This training could prevent future errors and lead to better features. Amazon isn’t the only company using humans to review voice commands, either. Google and Apple also employ teams of people to review what users say to their voice assistants to train the software to understand people better and to develop new features. Sure, the human element of these apparently computer-based services is creepy, but it’s also an essential part of how these technologies are developed.

“In the end, for really hard cases, you need a human to tell you what was going on,” Dr. Alex Rudnicky, a computer scientist at Carnegie Mellon University, said in an interview. Rudnicky’s been developing speech recognition software since the 1980s and has led teams competing in the Alexa Prize, an Amazon-sponsored contest for conversational artificial intelligence. While he maintains that humans are necessary for improving natural language processing, Rudnicky also believes that it’s incredibly unlikely for a voice command to get traced back to one individual.

“Once you’re one out of 10 million,” Rudnicky said, “it’s kind of hard to argue that someone’s going to find it and trace it back to you and find out stuff about you that you don’t want them to know.”

That doesn’t make the idea of a stranger reading your daily thoughts or knowing your location history feel any less creepy, however. It might be uncommon for a voice assistant to record me accidentally, but these systems don’t yet seem smart enough to wake up with 100 percent accuracy. The fact that Amazon catalogs and makes available all the Alexa recordings it captures, accidental or otherwise, makes me feel terrible.

The Privacy Problem Nobody Wants to Fix

In recent conversations, half a dozen technology and privacy experts told me that we need stronger privacy laws to tackle some of these problems with Alexa. The amount of your personal data that an Echo collects is bound by terms that Amazon sets, and the United States lacks strong federal privacy legislation like Europe’s General Data Protection Regulation (GDPR). In other words, the companies building voice assistants are more or less making the rules.

So I find myself circling back to a few questions. Who’s looking after the users? Why can’t I opt in to letting Amazon record my commands instead of wading through privacy settings looking for ways to stop sending my data to Amazon? And why are my options for opting out so limited?

In the Alexa privacy settings, you can opt out of letting Amazon use your recordings to develop new features and improve transcriptions. You cannot opt out of allowing Amazon to retain your recordings for other purposes.

The Alexa privacy problem nobody wants to solve. via Gizmodo

Settings like these put the onus on the user to protect their own privacy. If that has to be the case, why can’t these companies make my interactions with voice assistants completely anonymous?

Apple seems to be trying to do this. Whenever you talk to Siri, your commands are encrypted and sent to the company with a random Siri identifier attached. That identifier is not associated with your Apple ID, so there’s no way to open up your privacy settings on an iPhone and see what you’ve been saying to Siri. Not all Siri functionality requires your device to send information to Apple’s servers, either, which cuts down on exposure. Apple does use recordings of Siri commands to train the software, because that’s how artificially intelligent software gets better. The fact that Apple isn’t associating particular commands with a particular user might explain why so many people think Siri is terrible. Then again, Siri might be your best bet for some semblance of privacy in a voice assistant.
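Here is a rough Python sketch of the random-identifier idea, under loud assumptions: the classes `PseudonymousLog` and `Device` are hypothetical, the sketch leaves out encryption entirely, and it is not Apple’s implementation. It only illustrates why storing commands under a random ID, rather than under an account, means nobody can pull up “everything this account said” from the log alone.

```python
import uuid
from collections import defaultdict
from typing import Dict, List


class PseudonymousLog:
    """Hypothetical server-side store keyed only by a random identifier."""

    def __init__(self) -> None:
        self._commands: Dict[str, List[str]] = defaultdict(list)

    def record(self, pseudonym: str, command: str) -> None:
        self._commands[pseudonym].append(command)

    def history_for(self, pseudonym: str) -> List[str]:
        # Returns a copy. Note there is no way to look anything up by account.
        return list(self._commands.get(pseudonym, []))

    def keys(self) -> List[str]:
        return list(self._commands)


class Device:
    """Hypothetical assistant device that never sends its account ID."""

    def __init__(self, account_id: str) -> None:
        self.account_id = account_id        # stays on the device
        self.pseudonym = uuid.uuid4().hex   # random, not derived from account_id

    def send(self, log: PseudonymousLog, command: str) -> None:
        log.record(self.pseudonym, command)


log = PseudonymousLog()
phone = Device(account_id="alice@example.com")
phone.send(log, "set a timer for ten minutes")
phone.send(log, "what's the weather")

print(log.history_for(phone.pseudonym))
print("alice@example.com" in log.keys())
```

In this sketch the log can still be used to train software, but it has no link back to a person, which also means it offers less personal history for the assistant to draw on.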

This is the point in the debate when Tim Cook would like to remind you that Apple is not a data company. Companies like Google and Amazon turn your personal data into products that they can sell to advertisers or use to sell you more stuff, he’d say. This is the same argument we saw from the Apple CEO when he wrote a Time Magazine column earlier this year and announced plans to push for federal privacy legislation.

The idea is starting to get some traction. In January, the Government Accountability Office released a report calling for Congress to pass comprehensive internet privacy legislation. This report joined a chorus of privacy advocates who have long argued that the United States needs its own version of GDPR. In March, the Senate Judiciary Committee heard testimony from several people who pushed for federal privacy legislation. It’s far from clear whether or not Congress will act on this idea, however.

“Speech technology has gotten so good, it is important to be worried about privacy,” said Dr. Mari Ostendorf, an electrical engineering professor and speech technology expert at the University of Washington. “And I think that probably companies are more worried about it than the U.S. government is.”

One would hope that Amazon is at least rethinking its approach to privacy and voice assistants. Because right now, it seems like the general public is only just unraveling the myriad ways that devices like the Echo are recording our lives without our permission or sharing our personal data with strangers. The most recent controversy over Alexa merely scratches the surface of how a world full of always-on microphones is an utter privacy nightmare.

The problem is that companies like these with data-driven business models have every incentive to collect as much information about their users as possible. Every time you use Alexa, for example, Amazon gets a sharper view of your interests and behavior. When I asked for specifics on how Amazon uses this data, the company gave me a weird example.

“If a customer uses Alexa to make a purchase or interact with other Amazon services, such as Amazon Music,” an Amazon spokesperson said, “we may use the fact the customer took that action in the same way we would if the customer took that action through our website or one of our apps—for instance, to provide product recommendations.”

There’s evidence these kinds of recommendations could become more sophisticated in the future. Amazon has patented technology that can interpret your emotions based on the tone and volume of your voice. According to the patent, this hypothetical version of an Alexa-like technology could tell if you’re happy or sad and deliver “highly targeted audio content, such as audio advertisements or promotions.” One could argue that the only thing holding Amazon back from releasing an ad-supported Alexa is the potential for blowback from the Echo-owning public. The government probably isn’t going to stop it.

https://youtu.be/mED-ckf6kmQ

The Frightening Future

A future without more oversight could get very Philip K. Dickian, very quickly. I recently spoke with Dr. Norman Sadeh, a computer science professor at Carnegie Mellon, who painted a grim picture of what a future without better privacy regulation could look like.

“At the end of the day all of these speakers connect back to a single entity,” Sadeh explained. “So Amazon could use voice recognition to identify you, and as a result, it could potentially build extremely extensive profiles about who you are, what you do, what your habits are, all sorts of other attributes that you would not necessarily want to disclose to them.”

He suggests Amazon could make a business out of this, knowing who you are and what you like by the mere sound of your voice. And unlike the most dystopian notions of what facial recognition could enable, voice recognition could work without ever seeing you. It could work over phone lines. In a future where internet-connected microphones are present in an ever-increasing number of rooms, a system like this could always be listening. Several of the researchers I talked to brought up this dystopian idea and lamented its imminent arrival.

Such a system is so far hypothetical, but if you think about it, all of the pieces are in place. There are tens of millions of devices full of always-on microphones all over the country, in homes as well as public places. They’re allowed to listen to and record what we say at certain times. These artificially intelligent machines are also prone to errors and will only get better by listening to us more, sometimes letting humans correct their behavior. Without any government oversight, who knows how the system will evolve from here.

We wanted a brighter future than this, didn’t we? Talking to your computer seemed like a really cool thing in the ’90s, and it was definitely a significant part of the Jetsons’ lifestyle. But so far, it seems like an unavoidable truth that Alexa and other voice assistants are bound to spy on us, whether we like it or not. In a sense, the technology is designed so that this can’t be avoided, and without oversight, it will probably get worse in the future.

https://youtu.be/QqU7EA3I3T0

Maybe it’s foolish to think that Amazon and the other companies building voice assistants actually are worried about privacy. Maybe they’re working on fixing the problems caused by error-prone tech, and maybe they’re working on addressing the anxiety people feel when they see that devices like the Echo are recording them, sometimes without the users’ realizing it. Heck, maybe Congress is working on laws that would hold these companies accountable.

Ultimately, the future of voice-powered computers doesn’t have to be so dystopian. Talking to our gadgets would change the way we interact with technology in the most profound ways, if everyone were on board with how it was being done. Right now, that doesn’t seem to be the case. And, ironically, the fewer people we have helping to develop tech like Alexa, the worse Alexa will be.

Amazon’s Alexa and all other similar devices are spying on us. I hope you’ll stop using them soon!

http://strangesounds.org/2019/05/alexa-spy-device-amazon-video.html

Thanks to: http://strangesounds.org

Sat Mar 23, 2024 11:33 pm by globalturbo
